The Dusk of Traditional Factors

For decades, the quantitative investment landscape was dominated by a set of canonical factors—Value, Momentum, Size, Quality. These strategies consistently generated superior returns by exploiting persistent market anomalies. However, their very popularity has eroded their power. As more capital has flowed into these strategies and access to data and technology has been democratized, these alpha niches have become extremely crowded.

“Alpha decay,” the erosion of a signal’s predictive power, has accelerated dramatically. What was once an informational edge is now common knowledge, priced into assets almost instantaneously. Relying solely on these traditional factors today is more a strategy of systematic risk exposure (factor beta) than a genuine pursuit of alpha. To generate superior returns now, it is imperative to move beyond balance sheets and price series and toward new frontiers of inefficiency.

The New Frontier: The Universe of Unstructured Data

True informational advantage in the 21st century resides in unstructured data. A massive volume of textual information is generated daily, containing critical signals about economic health and corporate prospects long before they are reflected in financial statements. This universe includes, but is not limited to:

- News & Financial Media: Releases from agencies like Reuters and Bloomberg that move markets in milliseconds.

- Corporate Regulatory Filings: SEC 10-K and 10-Q reports, earnings call transcripts, and investor presentations that offer deep, nuanced insight.

- Central Bank Communications: Speeches, meeting minutes, and economic projections that dictate global monetary policy.

- Macroeconomic Data: Reports on employment, inflation, and consumer confidence that shape broad market sentiment.

The primary characteristic of this data is its inherent “inefficiency.” Its textual format makes it resistant to traditional quantitative analysis. For a human analyst, processing this avalanche of information in real time is impossible, and interpretations are slow and prone to cognitive biases. It is precisely at this intersection of high volume, high velocity, and high complexity that an algorithmic approach can establish a decisive, asymmetric edge.

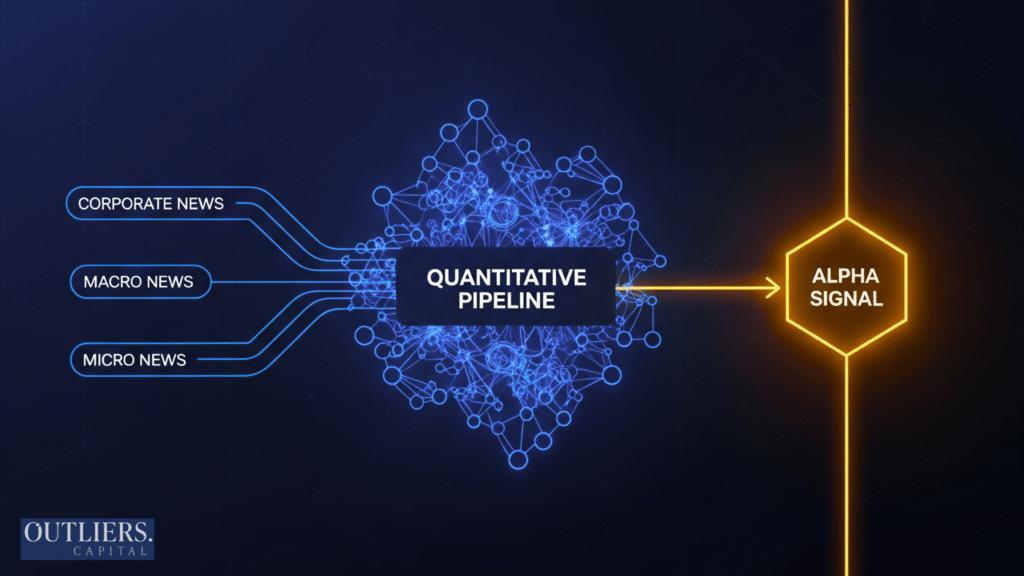

Architecture of the Quantitative Pipeline: From Information to Signal

To capitalize on this opportunity, we have engineered a modular and robust quantitative pipeline. Its architecture is designed to transform the chaos of raw text into the clarity of a mathematical trading signal. The process flows logically from data acquisition to factor generation, ensuring integrity, replicability, and the rigorous prevention of lookahead bias at every stage.

The operational flow is broken down into three main phases:

- Ingestion & Structuring: Capturing and cleaning textual data from multiple sources.

- Analysis & Classification: The AI engine that interprets and scores the content.

- Aggregation & Factor Construction: The synthesis of predictions into a pure investment signal.

Data Acquisition and Pre-processing

The quality of a quantitative signal is capped by the quality of its input data. Our process begins with the acquisition of institutional-grade data, ensuring maximum data fidelity and, critically, timestamp accuracy, a non-negotiable prerequisite for any strategy aiming to capture market reaction to new information.

Once ingested, the raw text undergoes a rigorous pre-processing regimen to prepare it for analysis by our models:

- Cleaning & Normalization: We cleanse the data, removing boilerplate legal text from reports and irrelevant content like advertisements to isolate the essential semantic content.

- Tokenization & Lemmatization: The text is broken down into its constituent units (tokens), and words are reduced to their lexical root (lemma). This allows for consistent information aggregation (e.g., “run,” “running,” and “ran” are treated as the concept “run”).

- Named Entity Recognition (NER): A critical step where key entities such as company names, persons, and products are identified and disambiguated. Our system differentiates between “Apple Inc.” (the company, ticker AAPL) and the fruit, ensuring the signal is attributed to the correct asset.
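The three pre-processing steps above can be sketched in a few lines of standard-library Python. This is a toy illustration under stated assumptions, not the production pipeline: the boilerplate patterns, the lemma table, and the entity dictionary are all hypothetical stand-ins for what would in practice be a trained lemmatizer and an NER model with entity linking.

```python
import re

# Hypothetical boilerplate patterns; a real pipeline would maintain far more.
BOILERPLATE = [
    re.compile(r"forward-looking statements[^.]*\.", re.IGNORECASE),
    re.compile(r"advertisement", re.IGNORECASE),
]

# Toy lemma table standing in for a real lemmatizer (assumption).
LEMMAS = {"running": "run", "ran": "run", "runs": "run"}

# Toy entity dictionary mapping surface forms to tickers (assumption).
ENTITIES = {"apple inc.": "AAPL"}


def clean(text):
    """Strip boilerplate patterns and collapse runs of whitespace."""
    for pattern in BOILERPLATE:
        text = pattern.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def lemmatize(tokens):
    """Map each token to its lemma when the toy table knows it."""
    return [LEMMAS.get(tok, tok) for tok in tokens]


def link_entities(text):
    """Return tickers whose full surface form appears in the text."""
    lowered = text.lower()
    return sorted({t for name, t in ENTITIES.items() if name in lowered})


headline = "Apple Inc. is running ahead of guidance.  ADVERTISEMENT"
cleaned = clean(headline)
lemmas = lemmatize(tokenize(cleaned))
tickers = link_entities(cleaned)
```

Matching on the full surface form “Apple Inc.” is what keeps a sentence about the fruit from being attributed to AAPL in this sketch; a real system would rely on context-aware disambiguation instead of a lookup table.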

The Analysis Engine: Beyond a Single Model

A single AI model, no matter how advanced, possesses inherent biases from its architecture and training data. Relying on one model is a fragile bet. Therefore, the core of our analysis engine is an ensemble of specialized AI agents. This approach is based on the principle of diversification: by combining the “opinions” of multiple expert models—which engage in a form of Agent-to-Agent (A2A) debate to converge on ideas—the variance of the prediction error is reduced, creating a far more robust and adaptive system.
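The diversification argument can be made concrete with a standard result. It is stated here under simplifying assumptions that the text above does not make: an equal-weight average of $M$ agents whose prediction errors $\varepsilon_i$ share a common variance $\sigma^2$ and a common pairwise correlation $\rho$.

```latex
\operatorname{Var}\!\left(\frac{1}{M}\sum_{i=1}^{M}\varepsilon_i\right)
  = \rho\,\sigma^{2} + \frac{1-\rho}{M}\,\sigma^{2}
```

With uncorrelated errors ($\rho = 0$) the variance falls to $\sigma^2 / M$. As $\rho$ approaches one the benefit vanishes, which is why an ensemble helps only to the extent that its agents make different mistakes.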

The Mathematics of Alpha: Aggregation and Factor Construction

The Dynamic Weighting Model

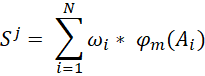

The synthesis of our ensemble’s predictions is not a simple average. It is a mathematically formalized aggregation process that dynamically weights each agent’s contribution. The final sentiment or impact score ($S^j$) for a given entity or topic ($j$) is defined as:
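A form consistent with the definitions that follow, offered as an assumption and not necessarily the exact expression used, is a weighted sum over the agents, with weights taken to be non-negative and to sum to one:

```latex
S^{j} = \sum_{i} \omega_i \, \phi_i ,
\qquad \omega_i \ge 0, \quad \sum_{i} \omega_i = 1
```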

Where:

- $S^j$: The final, aggregated sentiment score (or feature) for $j$.

- $j$: The index of the entity, topic, or feature being scored, out of $J$ in total.

- $A_i$: An individual AI agent in the ensemble.

- $\phi_i$: The normalized sentiment prediction from agent $A_i$.

- $\omega_i$: The dynamic weight assigned to agent $A_i$. This weight is not arbitrary; it is determined by out-of-sample performance metrics, model confidence, and the current market regime, which makes the aggregated signal more robust.
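The aggregation described by these definitions can be sketched as a weighted sum of agent predictions. This is a minimal sketch that assumes the score is the weight-normalized sum of each agent's prediction; the function name and the agent names are hypothetical.

```python
def aggregate_score(predictions, weights):
    """Weighted sum of agent predictions, with weights renormalized to one.

    `predictions` and `weights` are dicts keyed by agent name (hypothetical
    names; the source does not specify a data layout).
    """
    if predictions.keys() != weights.keys():
        raise ValueError("every agent needs both a prediction and a weight")
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(weights[a] * predictions[a] for a in predictions) / total


# Illustrative inputs only: normalized predictions in [-1, 1] for one entity.
predictions = {"news_agent": 0.6, "filings_agent": -0.2, "macro_agent": 0.1}
weights = {"news_agent": 0.5, "filings_agent": 0.3, "macro_agent": 0.2}
score = aggregate_score(predictions, weights)
```

Renormalizing inside the function means callers can pass unnormalized weights without changing the scale of the resulting score.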

Calculating the Dynamic Weights ($\omega_i$)

The weight $\omega_i$ is not a static parameter but a function that evolves in real time, reflecting our confidence in each agent under current conditions. It is determined by a combination of three factors, of which recent historical performance is the most important.

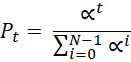

To mathematically model the decaying importance of past performance, we implement an exponential decay weighting scheme. This ensures that predictions generated today are weighted significantly more than those from a week ago, a principle at the core of our adaptive architecture. The time-decay performance component ($P_t$) for a prediction on a specific day ($t$) is calculated as:
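One form consistent with the definitions that follow is a geometric decay with $0 < \alpha < 1$, normalized over the lookback window. This is an assumption; the exact expression may differ, for instance by using $e^{-\alpha t}$ in place of $\alpha^{t}$.

```latex
P_t = \frac{\alpha^{t}}{\sum_{k=0}^{N-1} \alpha^{k}},
\qquad t = 0, 1, \dots, N-1
```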

Where:

- $P_t$: The normalized weight for a prediction from day $t$.

- $\alpha$: The decay factor, controlling how quickly a prediction’s importance fades.

- $t$: The number of days since the prediction was generated ($t = 0$ is today, $t = 1$ is yesterday).

- $N$: The total number of days in our performance lookback window.
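An exponential decay scheme with these ingredients can be sketched as follows. The sketch assumes a geometric form, with the weight for day t proportional to alpha raised to the power t and normalized over the window; the function names are hypothetical, and the real scheme may parameterize the decay differently.

```python
def decay_weights(alpha, n_days):
    """Normalized weights for t = 0 (today) through t = n_days - 1.

    Assumes geometric decay: the weight for day t is proportional to alpha ** t.
    """
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie strictly between 0 and 1")
    if n_days < 1:
        raise ValueError("the lookback window needs at least one day")
    raw = [alpha ** t for t in range(n_days)]
    total = sum(raw)
    return [w / total for w in raw]


def decayed_performance(daily_scores, alpha):
    """Time-decayed average of daily scores; daily_scores[0] is today."""
    weights = decay_weights(alpha, len(daily_scores))
    return sum(w * s for w, s in zip(weights, daily_scores))


# Illustrative values only: a three-day window with alpha = 0.5.
window_weights = decay_weights(0.5, 3)
recent_skill = decayed_performance([1.0, 0.0, 0.0], 0.5)
```

A smaller alpha concentrates weight on the most recent days, so the agent ranking reacts faster but becomes noisier; a value close to one approaches a plain average over the window.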

In addition to this temporal decay, weight allocation is adjusted by:

- Model Confidence: Our models output a confidence score alongside each prediction. Predictions made with high certainty receive a greater weight.

- Market Regime Detection: Our system classifies the current market environment into different “regimes” (e.g., high/low volatility, risk-on/risk-off). Agent weights are adjusted accordingly, amplifying the voice of the experts most suited to the prevailing conditions.
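How the three components combine is not specified above. The sketch below assumes, purely for illustration, that they multiply and are then normalized across agents; the function name, the agent names, and the regime multipliers are hypothetical.

```python
def dynamic_weights(performance, confidence, regime_fit):
    """Combine three per-agent components into normalized weights.

    Assumption: components are non-negative and combine multiplicatively.
    All three dicts are keyed by the same (hypothetical) agent names.
    """
    agents = performance.keys()
    if confidence.keys() != agents or regime_fit.keys() != agents:
        raise ValueError("all components must cover the same agents")
    raw = {a: performance[a] * confidence[a] * regime_fit[a] for a in agents}
    if any(v < 0 for v in raw.values()):
        raise ValueError("components must be non-negative")
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("at least one agent needs a positive raw weight")
    return {a: v / total for a, v in raw.items()}


# Illustrative inputs: a high-volatility regime that favors the news agent.
performance = {"news_agent": 0.8, "filings_agent": 0.6}   # time-decayed skill
confidence = {"news_agent": 0.5, "filings_agent": 1.0}    # model certainty
regime_fit = {"news_agent": 1.5, "filings_agent": 1.0}    # regime multiplier
omega = dynamic_weights(performance, confidence, regime_fit)
```

A multiplicative rule lets any one component silence an agent entirely when it drops to zero; an additive blend would be a gentler alternative.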

Constructing the Orthogonal Alpha Factor

The aggregated score $S^j$ is a potent “raw signal,” but it is not yet a pure investment factor. To turn it into alpha, it must be isolated from common sources of market return. The raw signal therefore undergoes a statistical neutralization process that removes its correlation with systematic risk factors, including:

- Market Beta: Exposure to the overall market movement.

- Style Factors: Correlation with factors like Momentum, Value, Size, etc.

- Industry Sector: Inherent biases toward a specific sector.

The result of this “cleaning” process is an orthogonal alpha factor: a source of return that is, by design, uncorrelated with traditional risk and return drivers, and that can therefore add genuine diversification to a portfolio.

The Pursuit of a Sustainable Edge: Constant Recalibration

In quantitative markets, the only constant is change. No model, however sophisticated, is a permanent solution. Alpha is a moving target. Our framework is not a static system but one that actively counteracts signal decay. Efficacy is maintained through the rigorous and constant recalibration of every pipeline component—from incorporating new data sources and retraining AI agents to adjusting the dynamic weighting algorithms. This is a process of perpetual adaptation.

The Future of Alpha is Synthetic

We have entered an era where competitive advantage no longer stems from mere access to information, but from the superior ability to synthesize it. The widespread adoption of generic LLMs only accelerates the decay of simple signals, making the pursuit of truly unique, robustly processed signals indispensable. The future of cutting-edge asset management lies in the creation of “synthetic insights”: the fusion of human expertise (in designing systems) with the superhuman scale, speed, and rigor of artificial intelligence (in testing, validating, and executing). Our approach embodies this symbiosis, transforming the art of investing into a rigorous, scalable science.

To discuss how our quantitative approach can provide a diversified alpha source for your portfolio, please contact us or visit our website: https://outliers.capital/